The Opportunity

Clinicians and staff were using the public version of ChatGPT, which put PHI and operational data at significant risk of exposure. The client needed a private, compliant AI platform aligned with clinical workflows and governed by its internal security controls.

Key Challenges

No safe AI platform for clinical operations: Clinicians and staff were using public AI tools with no control over safety settings, creating a high risk of patient data exposure, PHI mishandling, and compliance violations (e.g., HIPAA).

High licensing costs for public AI tools: Clinic‑wide usage would cost 3–5× more per patient encounter than using a centrally managed internal platform tuned for healthcare workflows.

No administrative oversight: Compliance and IT had no visibility into how AI was used, what prompts were submitted, or whether usage complied with internal privacy and security policies.

Inconsistent clinician experience: Different departments used different tools, leading to fragmented clinical workflows and wasted time during patient intake and documentation as staff managed multiple external platforms.

The Process

Step 1: Discovery & Scope

Mapped current workflows and defined success metrics for a private-cloud AI deployment.

Step 2: Security & Compliance Assessment

Assessed data flows, privacy controls, and HIPAA/GDPR requirements. The assessment also covered the design of data ingress/egress rules, identity controls, and audit logging frameworks.
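To make the egress-rule and audit-logging idea concrete, here is a minimal sketch under stated assumptions. It is not the client's implementation. The pattern set, the `AuditRecord` fields, and the `audit` helper are all hypothetical, and a real deployment would likely rely on a vetted PHI detection service rather than two regular expressions. The record stores a hash of the prompt instead of the prompt itself, which is one possible design choice for keeping PHI out of the log.

```python
import hashlib
import re
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical patterns for illustration only; not a complete PHI detector.
PHI_PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "mrn": re.compile(r"\bMRN[:\s]*\d{6,10}\b", re.IGNORECASE),
}


@dataclass
class AuditRecord:
    timestamp: str        # UTC time the prompt was submitted
    user_id: str
    department: str
    prompt_sha256: str    # hash only, so the log itself holds no prompt text
    phi_flags: list       # names of the patterns that matched
    allowed: bool         # False when an egress rule blocked the prompt


def check_egress(prompt: str) -> list:
    """Return the sorted names of the PHI patterns found in the prompt."""
    return sorted(name for name, pat in PHI_PATTERNS.items() if pat.search(prompt))


def audit(user_id: str, department: str, prompt: str) -> AuditRecord:
    """Build an audit record and decide whether the prompt may leave the boundary."""
    flags = check_egress(prompt)
    return AuditRecord(
        timestamp=datetime.now(timezone.utc).isoformat(),
        user_id=user_id,
        department=department,
        prompt_sha256=hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        phi_flags=flags,
        allowed=not flags,
    )
```

In this sketch, a call such as `audit("u1", "cardiology", "Patient MRN: 1234567 needs follow-up")` yields a record with `allowed` set to `False`, while a prompt without matching patterns passes through and is still logged.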

Step 3: Architecture & Infrastructure Design

Built the private-cloud blueprint on Azure with strict data residency, encryption, and observability capabilities.

Step 4: Model Development & Fine-Tuning

Created domain-specific prompts, enabled private-data fine-tuning, and validated behavior against governance rules.

Step 5: Deployment & Enablement

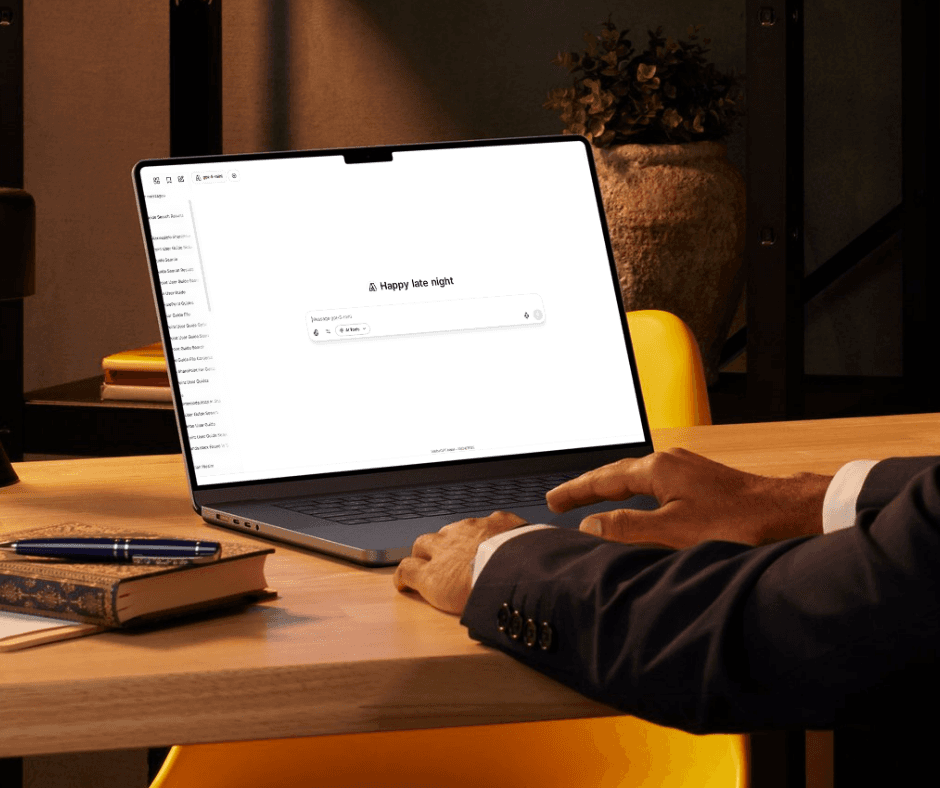

Launched the platform in Azure, integrated channels (shown in the UI screens on pages 5–6), and trained admins and users.

Our Solution

Ajaia developed a HIPAA-compliant AI chat platform deployed entirely within the client’s private Azure environment. The system includes domain-specific prompting, private and synthetic data fine-tuning, secure knowledge routing, and strict governance controls to ensure every interaction remains fully contained. A centralized admin console allows IT and compliance teams to manage access, enforce retention and privacy policies, and monitor usage across clinical and operational workflows.
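As an illustration of how an admin console might enforce retention policies, the following is a minimal sketch and does not describe the platform's actual code. The `RetentionPolicy` and `ChatLog` types, the per-department policy model, and the 30-day default are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class RetentionPolicy:
    department: str
    retain_days: int   # hypothetical per-department retention window


@dataclass(frozen=True)
class ChatLog:
    log_id: str
    department: str
    created_at: datetime


def expired_logs(logs, policies, now, default_days=30):
    """Return IDs of logs older than their department's retention window.

    Departments without an explicit policy fall back to default_days
    (an assumed default, not a regulatory value).
    """
    days_by_dept = {p.department: p.retain_days for p in policies}
    expired = []
    for log in logs:
        window = timedelta(days=days_by_dept.get(log.department, default_days))
        if now - log.created_at > window:
            expired.append(log.log_id)
    return expired


if __name__ == "__main__":
    utc = timezone.utc
    now = datetime(2024, 6, 1, tzinfo=utc)
    policies = [RetentionPolicy("billing", 90)]
    logs = [
        ChatLog("a", "billing", datetime(2024, 1, 1, tzinfo=utc)),
        ChatLog("b", "billing", datetime(2024, 5, 20, tzinfo=utc)),
        ChatLog("c", "intake", datetime(2024, 4, 15, tzinfo=utc)),
    ]
    print(expired_logs(logs, policies, now))  # prints ['a', 'c']
```

Passing `now` explicitly rather than reading the clock inside the function keeps the check deterministic, which makes it easier for a compliance team to reproduce a purge decision after the fact.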